This guest post by Bill Hunt, President and Founder at Back Azimuth, shows how centralized management of index coverage can uncover and resolve problems you might not have known you had.

Index coverage is a key issue for global and multinational websites. Website pages that are never indexed by search engines can’t appear in search results. This has obvious detrimental effects on visibility and revenue, and it also weakens hreflang, because hreflang can only point searchers to alternate pages that are indexed.

Products that don’t appear in search results don’t sell as well. Where global sites are concerned, if no local language or country page is indexed, the global page or a different country’s page can show up instead, causing confusion and frustration for consumers. Imagine the Australian searcher who clicks on your listing in Google and lands on a page with the price in British pounds, or, even worse, a price with a dollar sign that is actually in US dollars rather than Australian dollars.

Google has demonstrated the importance of index coverage by making multiple sets of information available in its Search Console. These reports show what Google has indexed, what it has excluded, and multiple types of errors. They are literally a to-do list for site clean-up. That is why one of the first things on any technical SEO checklist is to check the coverage report and indexing rate for a website. (Google has kept the International Targeting report so you can fix these pages; it cannot find hreflang alternates for you if those alternates are not indexed.)

Unfortunately, for many global brands this never happens.

Despite the problems that can arise from index coverage issues and their direct impact on a business’s online revenue, many companies with extended brands and global sites do not have a solid protocol for managing and monitoring this critical function.

Here are two examples of problems where a centrally managed solution was necessary to ensure the site was performing correctly.

Company #1 – Missing products in global ecommerce markets

Website profile

- Industry: Global ecommerce

- Number of country/language versions: 44

- Number of SKUs (products) per country: 3,000

The problem: missing products and unreliable CMS-generated sitemaps

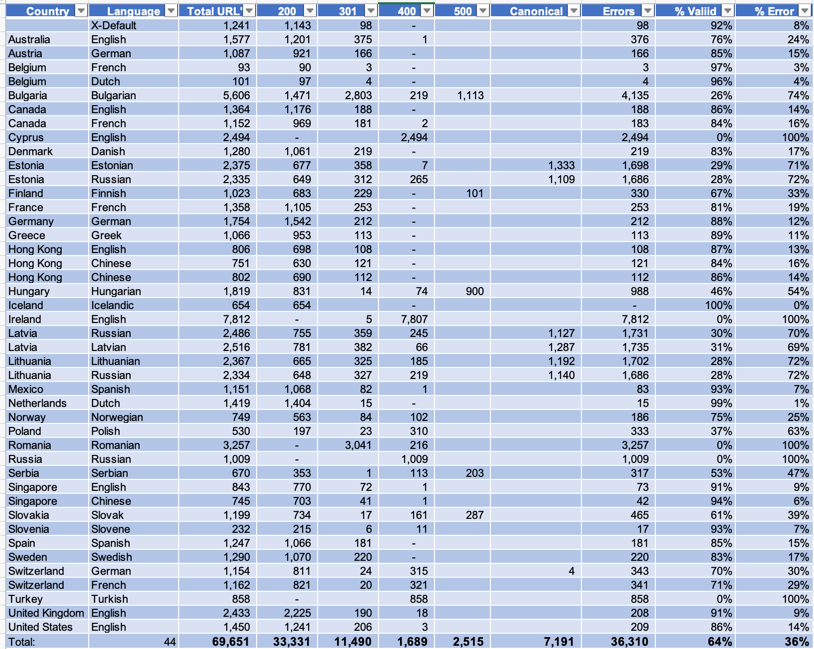

We imported all of the XML sitemaps into HREFLang Builder. We expected 3,000 URLs in each site’s XML sitemap, for a total of 132,000 URLs.

However, none of the markets had 3,000 URLs. On average, sites listed just 1,500, or about half of what was expected. To further complicate things, 36% of the listed URLs had some sort of non-indexable status code.
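
Counting what a CMS-generated sitemap actually lists is a quick first check. The sketch below is a minimal Python illustration, not how HREFLang Builder performs its import: it assumes each market’s sitemap is a single `urlset` file following the sitemaps.org protocol, and the helper names `sitemap_urls` and `coverage` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol for <urlset> files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return the <loc> value of every <url> entry in a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def coverage(listed: int, expected: int) -> float:
    """Share of expected URLs (for example, one per SKU) that the sitemap lists."""
    return listed / expected if expected else 0.0
```

For a market that lists 1,500 URLs against 3,000 expected SKUs, `coverage(1500, 3000)` returns 0.5; across the 44 markets described here, the expected total is 44 × 3,000 = 132,000 URLs.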

Across all 44 markets, an average of only 25% of all URLs were submitted via sitemaps. We were forced to conclude that these CMS-generated sitemaps were unreliable and that we needed another URL source to augment them. The best option was to use the valid URLs from an SEO diagnostic tool like Oncrawl. This allowed us to capture crawlable URLs and merge them with those from the CMS to get the largest set of valid URLs.
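
Combining the CMS sitemap URLs with a crawler’s list of indexable URLs amounts to a deduplicated union. A minimal Python sketch follows. It assumes both lists have already been exported as plain URL strings (fetching them from Oncrawl’s API is not shown), and the normalization rules in the hypothetical `normalize` helper are assumptions that should be adjusted to each site’s conventions.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url: str) -> str:
    """Lower-case the scheme and host and drop any #fragment.

    Paths and query strings are kept as-is, since many servers treat them
    case-sensitively (an assumption to verify per site).
    """
    parts = urlsplit(url.strip())
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, "")
    )


def merge_sources(*sources):
    """Deduplicated union of several URL lists, preserving first-seen order."""
    merged = {}
    for source in sources:
        for url in source:
            merged.setdefault(normalize(url), None)
    return list(merged)
```

Lower-casing only the scheme and host is a deliberately conservative choice: folding case in paths could merge pages that a server actually treats as distinct.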

Company #2 – Major issue of wrong market pages showing in search results

Website profile

- Industry: Multinational consumer brand

- Number of country/language versions: 165

Observed symptom: wrong market pages in search results

The second project started with a multinational consumer brand that wanted to solve a major issue: the wrong market pages were appearing in search results. For example, in Thailand the global site was showing up rather than the Thai brand site. In Morocco, pages from twelve different country sites appeared across the brand’s range of keywords, and only one Moroccan page appeared in the results. This was causing confusion and frustration for consumers and brand managers in the various markets.

The problem: missing URLs, old infrastructure, and difficult hreflang implementation

Implementing hreflang tags proved to be more difficult than the SEO team expected. The majority of the sites were on separate CMS platforms. The naming conventions varied significantly from site to site, making it impossible to use exact-match page names. It was eventually deemed impossible to use hreflang tags on the pages themselves.

This made them a perfect candidate for HREFLang Builder.

Due to the varied domain and site structures, Back Azimuth needed to develop a country-language intake matrix listing the website, country, language, and URL source for each market.
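
An intake matrix like this can be kept as a simple CSV file. The sketch below assumes hypothetical column names (`website`, `country`, `language`, `url_source`) matching the four fields above, plus ISO 3166-1 country codes and ISO 639-1 language codes. The `MarketSite` type and its `hreflang` property are illustrations, not part of any tool mentioned here.

```python
import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class MarketSite:
    website: str     # domain or subfolder of the market site
    country: str     # ISO 3166-1 alpha-2 code, e.g. "TH" (assumed convention)
    language: str    # ISO 639-1 code, e.g. "th" (assumed convention)
    url_source: str  # where the URL list comes from, e.g. "sitemap" or "crawl"

    @property
    def hreflang(self) -> str:
        """hreflang value in language-REGION form, e.g. "th-TH"."""
        return f"{self.language.lower()}-{self.country.upper()}"


def load_matrix(csv_text: str) -> list[MarketSite]:
    """Parse a matrix whose header row is website,country,language,url_source."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        MarketSite(**{key: value.strip() for key, value in row.items()})
        for row in reader
    ]
```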

The first thing we found was that nearly 70% of the sites did not have any XML sitemaps. Of these, nearly a third were powered by old CMS platforms that could not generate them.

The remaining sites had quality problems like those in the first case study, or listed only a small portion of their URLs.

In the screen capture below from our “Page Alignment Tool”, the discrepancy in page counts from one source to another is evident:

- The SEO crawler found just over 11,000 indexable URLs with a 200 status code.

- The CMS only reported 944.

- The client was tracking 46 URLs as “Preferred Landing Pages” for mission-critical keywords in their rank-checking tool.

After merging the lists of URLs from the different sources (sitemaps, crawl data, and CMSs), only 440 URLs were reported by all three sources. Nearly 10,000 URLs, including more than half of the client’s list of critical URLs, were missing from the CMS-generated XML sitemaps.
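
The comparison described above is essentially a set of intersections and differences. The Python sketch below shows that logic, assuming every source has already been reduced to normalized URL strings. It is not the implementation of the Page Alignment Tool, and the function name and report keys are hypothetical.

```python
def alignment_report(sitemap_urls, crawl_urls, cms_urls, priority_urls):
    """Compare URL sources; every argument is an iterable of normalized URLs."""
    sitemap, crawl, cms, priority = (
        set(urls) for urls in (sitemap_urls, crawl_urls, cms_urls, priority_urls)
    )
    known = sitemap | crawl | cms
    return {
        # URLs that every source agrees on.
        "in_all_sources": sitemap & crawl & cms,
        # URLs some source knows about but the sitemaps never submit.
        "missing_from_sitemaps": known - sitemap,
        # Business-critical URLs that the sitemaps never submit.
        "priority_missing_from_sitemaps": priority - sitemap,
    }
```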

Collaborating with global DevOps teams to find solutions

In both of these projects, the global DevOps team realized they needed a way to centrally manage their XML sitemaps and hreflang annotations to ensure that as many pages as possible were submitted to and indexed by Google.

They reached out for assistance and, in collaboration with Back Azimuth’s teams, broke the solution down into a series of challenges:

- Challenge 1 – Getting a master list of all URLs for each site

- Challenge 2 – Creating HREFLang XML Sitemaps

- Challenge 3 – Submitting them to Google

- Challenge 4 – Maintaining the XML Sitemaps

- Challenge 5 – Monitoring Outcome

HREFLang Builder and Oncrawl as a solution for market alignment in search

Setup: addressing challenges of centralization and automation

Due to the decentralized nature of the site hosting and IT support across the markets, the idea that we could get files uploaded to local servers was quickly dismissed.

Instead, the plan was to create a central host for the XML files. This also solved a major part of the second goal, automation: by using a domain outside of the core sites, HREFLang Builder could upload updates automatically.

For security reasons, it was decided to use a dedicated domain to host the XML sitemaps for each domain and to leverage cross-domain verification. To enable cross-domain verification, we needed to set up Google Search Console properties for all of the websites under a master GSC account. Again, the distributed nature of the websites made uploading an HTML file or tagging the pages impossible.

Except for a few domains, all were managed and registered centrally by the global DevOps team. In less than a day, they were able to submit and record the domain validations, shaving months off the estimated time to complete traditional validations.

Challenge 1 – Getting a master list of all URLs for each site

One of the biggest challenges was to create a master list of indexable URLs for each site.

We used the existing XML sitemaps, the lists of priority URLs, and the output of diagnostic crawls.

The great news was that the internal SEO teams at both companies were using Oncrawl as their preferred SEO diagnostic tool. We set up each market site in Oncrawl and used its robust API to let HREFLang Builder seamlessly import Oncrawl’s “200 Indexable” report of valid URLs.

Challenge 2 – Creating HREFLang XML Sitemaps

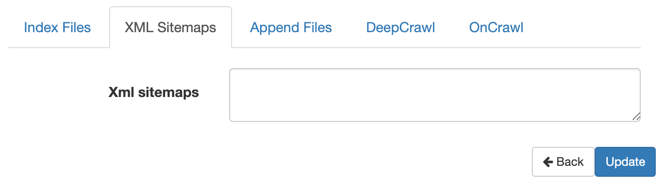

With all of the sites set up in Oncrawl, we connected to the API and then added the master list of XML sitemaps and sitemap index files to HREFLang Builder’s AutoUpdate URL Import Manager.

We imported the URLs from all sources into HREFLang Builder, merged them, and then deduplicated them. Finally, we ran a secondary check on the URLs that were not in Oncrawl’s API import to make sure that they were valid.
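
A secondary check of URLs that the crawler did not confirm could look like the hypothetical sketch below, which uses only Python’s standard library. It sends a HEAD request and treats anything other than a direct 200 response as invalid. Unlike a full SEO crawler, it does not inspect robots meta tags or canonical links, and some servers reject HEAD requests, so a negative result is a prompt for manual review rather than proof.

```python
import http.client
import urllib.request


def urls_to_recheck(merged_urls, crawler_indexable_urls):
    """URLs contributed only by other sources (sitemaps, priority lists),
    i.e. not confirmed as indexable by the crawler import."""
    confirmed = set(crawler_indexable_urls)
    return [url for url in merged_urls if url not in confirmed]


def is_valid(url, timeout=10.0):
    """True if a HEAD request returns 200 without redirecting elsewhere."""
    try:
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout) as response:
            # urlopen follows redirects, so a changed final URL means the
            # original URL redirects and should not be listed in a sitemap.
            return response.status == 200 and response.geturl() == url
    except (OSError, ValueError, http.client.HTTPException):
        # HTTPError/URLError (4xx/5xx, DNS failures) and timeouts are OSError
        # subclasses; ValueError covers malformed URLs; HTTPException covers
        # protocol-level errors such as a bad status line.
        return False
```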

Once we had our initial import of URLs, the system was able to start matching them and creating XML sitemaps.

HREFLang Builder lets you choose whether to export only matched URLs or all URLs. We selected the “All URL” option so that the output would also include URLs that did not have an equivalent in every market.
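
The sketch below shows one way to write a per-market hreflang sitemap with Python’s standard library, using the `xhtml:link` alternate format that Google documents for sitemaps. The input structure (a list of “clusters”, each mapping an hreflang code to that market’s URL for the same page), the function name, and the `export_all` flag are assumptions made for illustration. The flag only mimics the difference between exporting all URLs and exporting matched URLs; it is not HREFLang Builder’s implementation.

```python
import xml.etree.ElementTree as ET

SM_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"
# Serialize with a default sitemap namespace and the conventional xhtml prefix.
ET.register_namespace("", SM_NS)
ET.register_namespace("xhtml", XHTML_NS)


def build_hreflang_sitemap(clusters, market, export_all=True):
    """Build the sitemap XML (as a string, no declaration) for one market.

    clusters: list of dicts, each mapping hreflang code -> URL for one page.
    market: hreflang code of the market whose sitemap is being written.
    export_all: if False, skip pages with no equivalent in any other market.
    """
    urlset = ET.Element(f"{{{SM_NS}}}urlset")
    for cluster in clusters:
        if market not in cluster:
            continue  # this page does not exist in the target market
        has_alternates = len(cluster) > 1
        if not export_all and not has_alternates:
            continue
        url = ET.SubElement(urlset, f"{{{SM_NS}}}url")
        ET.SubElement(url, f"{{{SM_NS}}}loc").text = cluster[market]
        if has_alternates:
            # Each entry lists every version of the page, itself included.
            for code, href in sorted(cluster.items()):
                ET.SubElement(
                    url, f"{{{XHTML_NS}}}link",
                    rel="alternate", hreflang=code, href=href,
                )
    return ET.tostring(urlset, encoding="unicode")
```

With `export_all=True`, a page that exists only in the target market still gets a `<url>` entry without alternates, so it is submitted for indexing even though it has no equivalent elsewhere.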

Challenge 3 – Submitting them to Google

Within an hour, we had XML sitemaps for every country and language version. We uploaded them to the central server, and the system automatically pinged Google to notify the search engine that they were ready for indexing.

Challenge 4 – Maintaining the XML Sitemaps

The solution we proposed had to be able to map new URLs across all of the country and language versions in order to keep the sitemaps up to date. For this, we developed a mapping table between the different versions.

Some of the connections were simpler than others. A few of the sites were already using the same structure and URLs. And in the case of the ecommerce site, we were able to match pages based on the global SKU even though there was little resemblance between the URLs.
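
Matching on a shared identifier can be sketched as grouping each market’s product URLs by SKU. The example below assumes each market’s product list is available as (SKU, URL) pairs, for instance from a CMS or product feed export; that input format and the function name are hypothetical.

```python
from collections import defaultdict


def clusters_by_sku(market_products):
    """Group product URLs from every market by their global SKU.

    market_products: {hreflang code: [(sku, url), ...]}
    Returns {sku: {hreflang code: url}}; pages that share a SKU are treated
    as equivalents, however different their URLs look.
    """
    clusters = defaultdict(dict)
    for market, products in market_products.items():
        for sku, url in products:
            clusters[sku][market] = url
    return dict(clusters)
```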

In the case of the brand site, however, we needed to develop a manual mapping table. Our first goal was to map the critical pages, identified by the client, for which the incorrect version was ranking.
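
A manual mapping table is often easiest to maintain as a spreadsheet exported to CSV. The sketch below assumes a hypothetical layout in which each column header is an hreflang code and each row holds one page’s URLs across markets, with a blank cell where a market has no equivalent page.

```python
import csv
import io


def load_manual_mapping(csv_text):
    """Read a mapping table: one row per page, one column per hreflang code.

    Returns a list of {hreflang code: url} dicts; blank cells (markets with
    no equivalent page) are dropped.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {code: url.strip() for code, url in row.items()
         if code is not None and url and url.strip()}
        for row in reader
    ]
```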

Challenge 5 – Monitoring Outcome

Using ranking reports and Google Search Console Coverage reports, the internal teams are able to monitor the results.
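
Indexing-rate monitoring can be reduced to a simple per-market threshold check. The sketch below assumes the submitted and indexed counts have already been collected from Search Console (how they are gathered is not shown), and the 80% threshold is an arbitrary placeholder.

```python
def flag_low_indexing(counts, threshold=0.8):
    """Return {market: indexing rate} for markets below the threshold.

    counts: {market: (submitted, indexed)}, e.g. figures read from Search Console.
    threshold: hypothetical minimum acceptable indexed/submitted ratio.
    """
    flagged = {}
    for market, (submitted, indexed) in counts.items():
        rate = indexed / submitted if submitted else 0.0
        if rate < threshold:
            flagged[market] = round(rate, 3)
    return flagged
```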

Lessons learned

Index coverage issues can require creativity to solve, particularly when they involve distributed website infrastructure and individual market teams. But, surprisingly, the biggest technical challenges on sites with many country and language versions are getting a master list of website URLs, and mapping those URLs on one site version to another.

By deploying the centralized strategy we’ve explained here, and by using the powerful diagnostic tools of Oncrawl and the aggregation and management functionality of HREFLang Builder, we were able to collaboratively solve a major problem for these clients and help them recapture significant lost revenue.

In both of these client cases, indexing rates increased, and the clients saw fewer canonical errors and better alternate page mapping. As a result, their traffic and sales from local markets grew exponentially, because the correct page was showing in search results and visitors could easily make a purchase.

Trying to fix these problems within the available CMS platforms, or in the local markets, would have been impossible.

This is an example of the out-of-the-box problem-solving that we are able to do at Back Azimuth using HREFLang Builder and our technical partnerships.