In the life cycle of a website, migrations are critical moments: you have to pay attention to many details to avoid a negative impact on SEO.

Each site migration is different. A migration does not bring instant results, but it is the perfect time to improve your SEO. When a site evolves, search engines do not always keep pace, and they will need a more or less long period of adaptation. However, this is the most opportune time to improve the site’s technical quality, enrich the code with HTML5 and JSON-LD, strengthen siloing through the internal linking architecture, add editorial richness and send strong SEO signals to search engines.

There are several types of site migration depending on the changes that occur, usually involving one or more of the following elements:

- Hosting / IP Address

- Domain name

- URL structure

- Architecture of the site

- Content

- Design concept

The most difficult site migrations involve changes to most (or all) of the above.

If the SEO redesign is properly managed, there will be a clear before and after. Take the time to understand the site’s pain points and work on correcting them. Improve performance and make the site easier to crawl. You’ll be a winner in the long run.

In this article, we will provide you with the best practices for a safe SEO website migration.

Key steps, actions and documents of a SEO website migration

A controlled migration is a well-prepared migration. You will therefore be effective if you follow a series of structuring steps. These steps will also allow you to accumulate a set of documents and data that you can rely on throughout the migration cycle and then qualify the good (or bad) impact of the migration.

Here is a table listing the actions and migration documents according to objectives:

| GOALS | ACTIONS | DOCUMENTS |
| --- | --- | --- |
| Website picture | Preliminary crawl; aggregate server log data | Crawl reports; technical files (robots.txt, sitemaps, old redirection files); table of crawled/non-crawled URLs, orphaned pages and crawl frequency per day for each |
| Compile, aggregate and store data from external tools | Read the data: Google Analytics, Omniture, AT Internet, Search Consoles (Bing/Google), SemRush, MyPoseo, Majestic, SEObserver, … | Export of positions, click-through rates and impressions by expressions/niches; analytics data export; backlinks export, TF/CF, niche; export of keywords from niches; keyword research; data cross-referencing |
| Identification of areas for improvement | Audit and data analysis | Task list |
| Subject prioritization | Evaluation of gains/efforts | ROI matrix |
| Specifications | Evaluation of gains/efforts | Requirements specification with an annex for SEO-friendly code |
| Production | Development; knowledge implementation | Team discussion around critical points; quality code |
| Modifications check | Technical acceptance testing; code validation; code guarantee | Comparison crawl report; daily correction report; validation of the tests to be run on D-day |
| Live | Deploying new code and files (sitemaps, robots.txt, redirections); integrity test of the new site; declaration of the new sitemaps; force engine crawl with Search Console | Passing the new code in production; up-to-date XML sitemap files; validated robots.txt optimization; redirection file if applicable; expected and stable code in production |
| Live monitoring | Crawl Over Crawl; crawl of redirected URLs | Return to data comparisons; report on the development of KPIs |

Data compilation and analysis before migration

Preliminary Crawl

Understanding the problems of a site is essential for preparing the specifications and the KPIs used to monitor the migration. To get to know the site better and detect areas for improvement, we invite you to take a complete snapshot of the site before migration.

To do this, launch a crawl that follows the same principles as Google: respecting robots.txt and following links with query strings. If your migration includes several subdomains, Oncrawl allows you to discover and crawl subdomains.

Example of information on the architecture and health status of a site

If you have the possibility to collect the log data, don’t hesitate to do so for each URL. Crawl/Logs cross-referenced data can be used to identify success factors for a set of pages.

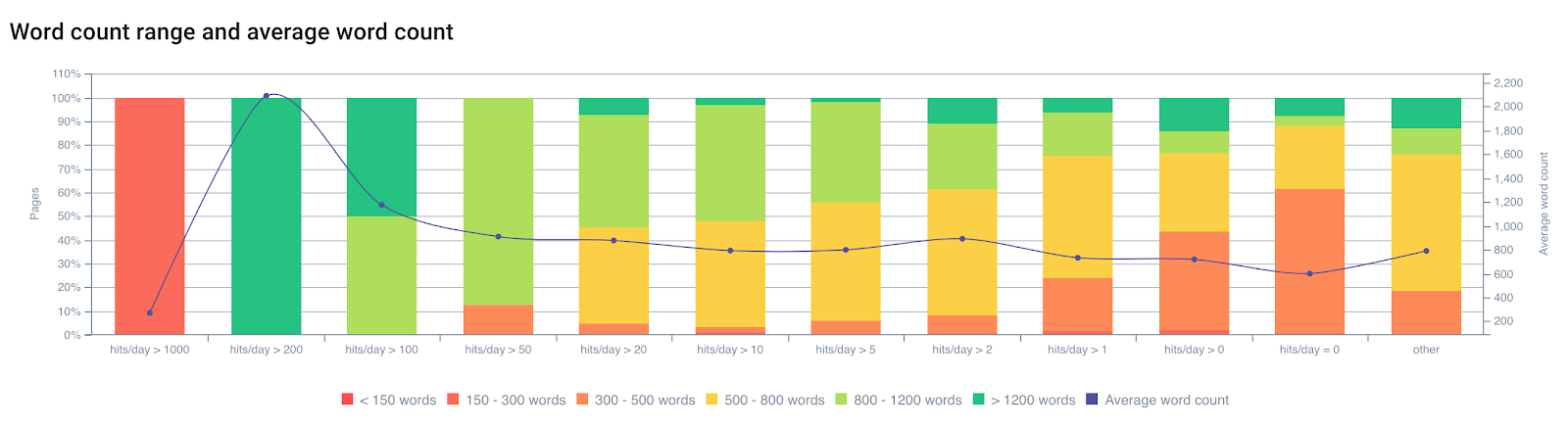

View the number of bot hits/day according to the number of words
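As a minimal sketch of the log side of this cross-analysis, the snippet below counts bot hits per day and per URL. It assumes your server writes the Apache/Nginx "combined" log format and identifies the bot by a plain substring match on the user agent, which is naive (user agents can be spoofed). The function name `bot_hits_per_day` and the sample lines are illustrative only.

```python
import re
from collections import Counter
from datetime import datetime

# Assumption: logs use the Apache/Nginx "combined" format.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<ts>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def bot_hits_per_day(lines, bot_token="Googlebot"):
    """Count hits per (day, path) for requests whose user agent contains bot_token."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or bot_token not in match.group("agent"):
            continue  # skip unparseable lines and non-bot traffic
        # Timestamps look like 10/Oct/2023:13:55:36 +0000
        day = datetime.strptime(match.group("ts"), "%d/%b/%Y:%H:%M:%S %z").date()
        hits[(day.isoformat(), match.group("path"))] += 1
    return hits

# Hypothetical sample: two Googlebot hits and one human visit on the same URL.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:14:02:10 +0000] "GET /shoes/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:14:03:00 +0000] "GET /shoes/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
hits = bot_hits_per_day(sample_log)
```

The resulting counts can then be joined with crawl data (word count, depth, inlinks) on the URL to look for the factors that correlate with crawl frequency.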

We know from experience that Google’s crawl is impacted by several on-page factors. Google does not browse all pages at the same frequency. When pages are crawled less often, the index is not refreshed for them, so Google will tend to downgrade them. An obsolete page in the index is no longer necessarily the “best answer” to a user request. There is a strong relationship between crawl frequency, index update and ranking.

A page will be less crawled if:

- It’s too deep in architecture;

- It does not contain enough unique text;

- It does not have enough internal (or external) links pointing to it;

- It’s not fast enough to load or too heavy;

- … etc.

To learn more about the influence of on-page factors on Google’s crawl, you can read the article “Google Importance Page” which will allow you to learn more about Google’s patents related to “Crawl Scheduling”.

The preliminary crawl allows you to get to know your site. It will also allow you to select the pages that will serve to validate your optimizations. Your preliminary analysis will have to take this data into account in order to correct the factors that penalize the crawl.

Reading data from external tools

Google Search Console / Bing Search Console

In Google’s and Bing’s Search Consoles, you will find both qualitative and quantitative data. It is very good practice to collect this data to monitor improvement.

We invite you to cross-check this data to understand what the pages that rank have in common, and to spot missing keywords, problems related to mobile/AMP and SEO errors.

Important data to store: number and types of errors, crawl budget (numbers of hits per day) and loading time, HTML improvement, number of indexed pages, position/CTR/Impression by expressions and urls.

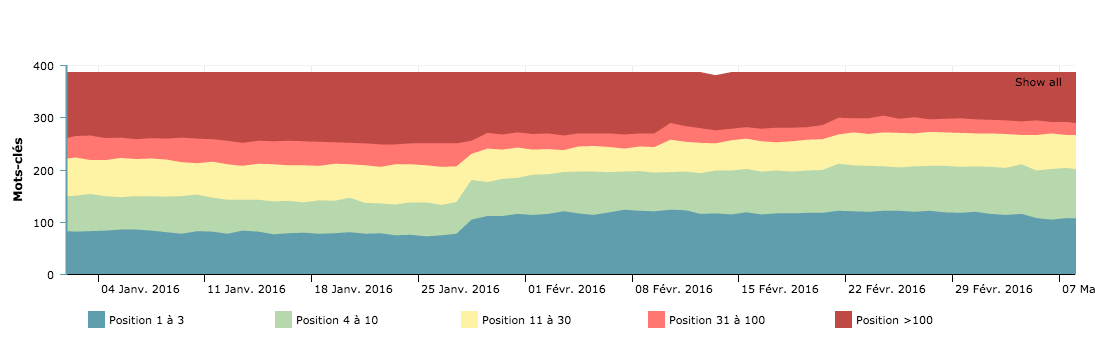

Explored pages by day – Crawl budget curve to be taken to compare before/after migration

These views from the search engines allow you to understand how they interpret your site. It is also the basis for KPI post-migration monitoring. It is important to take into account SEO errors reported by engines to correct them first.

Ranking and visibility

Positioning tracking tools such as MyPoseo simplify the study of site visibility and keywords.

In addition to Google Search Console data, you need to know your visibility rate on your niche. This data will be essential for your post-migration analysis.

Analytics

Tracking and analytics solutions can be used to collect usage data from your users. They are also a source of information on the performance of your pages in terms of organic visits, bounce rate, conversion funnels, user paths and attractiveness of the site. You need to export the data that will allow you to detect areas for improvement and store it for your post-launch analysis.

Exporting all URLs that have received at least one visit in the last 12 months via Google Analytics is one way to retrieve a large number of valuable indexed pages.

Classify landing pages to prioritize your actions, don’t overlook pages that have not been reviewed, and once again try to detect performance factors that can inspire your recommendations.

Segmentation

Following the preliminary crawl and the recording of external data, you will have a complete view of the migration scope. From your analyses, you can create sets of pages that can be grouped according to different metrics to qualify your migration.

With Oncrawl, it is possible to create 10 sets or a set of 15 groups of pages. Why not use this feature to create migration sets?

Create a categorization for each of the areas for improvement. Oncrawl allows you to create groups based on more than 350 metrics – poor, heavy, duplicated, not visited by robots, little visited by users, with too few incoming links or too deep, for example. They will allow you to validate the redesign after the fact.

Pro tip: You can group all pages that respond in more than 1 second or that have a bad crawl rate, then cross them with ranking data and user behavior (CTR in GSC or bounce rate in analytics). You will then be able to make the right decisions.

Cross-checking crawl, log and analytics data is the best way to perform a complete and realistic analysis for your migration.
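The grouping described above can be sketched in a few lines once crawl, log and analytics data are merged per URL. This is only an illustration: the field names (`response_s`, `depth`, `inlinks`, `bot_hits`) and every threshold other than the 1-second response time are hypothetical, and this is not how Oncrawl implements its segmentation.

```python
def segment_pages(pages, max_response_s=1.0, max_depth=5, min_inlinks=3):
    """Assign each URL to every 'area for improvement' group it falls into."""
    groups = {"slow": [], "too_deep": [], "few_inlinks": [], "no_bot_hits": []}
    for page in pages:
        url = page["url"]
        if page["response_s"] > max_response_s:
            groups["slow"].append(url)
        if page["depth"] > max_depth:
            groups["too_deep"].append(url)
        if page["inlinks"] < min_inlinks:
            groups["few_inlinks"].append(url)
        if page["bot_hits"] == 0:
            groups["no_bot_hits"].append(url)
    return groups

# Hypothetical merged records (crawl + logs) for three pages.
pages = [
    {"url": "/", "response_s": 0.3, "depth": 0, "inlinks": 120, "bot_hits": 45},
    {"url": "/shoes/red", "response_s": 1.4, "depth": 3, "inlinks": 8, "bot_hits": 2},
    {"url": "/archive/2012/item-9", "response_s": 0.8, "depth": 7, "inlinks": 1, "bot_hits": 0},
]
groups = segment_pages(pages)
```

Saving these groups before the migration gives you fixed sets of URLs to re-measure afterwards.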

Pages to edit, create, delete

Thanks to the collected data, you will be able to identify pages with the most visits, pages with the most important duplicate content rates, missing pages in your architecture, etc.

You should keep in mind that the restructuring, in addition to correcting the most penalizing SEO problems and bringing the site into SEO compliance, should also allow your site to be enriched and realigned around the key phrases of search engine users. They search with their own words for the information present in your pages. The work of semantic exploration and adjustment is often neglected, yet it yields the best performance in terms of ranking.

New hub and landing pages need to be added to best suit users’ needs. They must be aligned with the queries on which the site is least well positioned.


Internal linking management

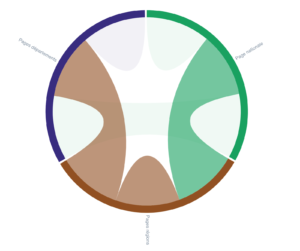

Internal linking is now more than ever one of the key factors for a successful SEO restructuring. The variation of anchors, the optimization of internal popularity diffusion and the semantic alignment of anchors are powerful allies.

Representation of an optimized internal linking with Oncrawl

Take the time to understand how the popularity of your pages is spread through the various link blocks. Try to reduce irrelevant links on your pages as much as possible. The best example is the mega-menu.

Avoid making links to all pages from all pages; this is counterproductive. Remove links that are not from the same silo from the HTML code – while retaining links to category headers.

Paginated navigation is also a robot trap. Try to create shortcuts between pages using an algorithm that lets robots jump to the nearest units, tens and hundreds.
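One possible reading of this shortcut algorithm is sketched below: from any page of a paginated list, link to the adjacent pages (units), the nearest multiples of 10 and 100 on each side, plus the first and last pages. The function name and the exact choice of targets are assumptions made for illustration.

```python
def pagination_shortcuts(current, last):
    """Return the sorted page numbers to link to from page `current` of `last`."""
    # Always link to the first page, the last page and the direct neighbours.
    targets = {1, last, current - 1, current + 1}
    step = 10
    while step <= last:
        lower = (current - 1) // step * step  # nearest multiple strictly below current
        upper = (current // step + 1) * step  # nearest multiple strictly above current
        targets.update({lower, upper})
        step *= 10  # move from tens to hundreds, thousands, ...
    # Drop out-of-range pages and the current page itself.
    return sorted(p for p in targets if 1 <= p <= last and p != current)

links_from_47 = pagination_shortcuts(47, 350)
```

With this scheme the number of links per page grows with the number of digits in the last page rather than with the number of pages, which keeps deep pages of the list only a few clicks away.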

Try to vary the anchors that point to the pages as much as possible. This allows you to show engines that you are using a wide semantic field and favors the page importance score and thus the crawl of the pages.

Also think about reducing or segmenting the footer links to avoid creating unattractive yet very popular pages. Terms and conditions (CGU) or newsletter subscription links, as well as links going out of your domain, are all internal PageRank leaks. Also watch out for links to social networks: these sites are often bigger than yours and they suck up the PageRank of your site.

Redirection

This activity requires a lot of attention because things can go wrong. It is important to retrieve the current redirection rules so that you can then match them to the new tree structure, if any. The old redirection rules need to be updated to avoid redirect chains.

It is also necessary to check that all old URLs return a 301 redirect to the new ones.

Test crawls can be run by exporting the list of all old URLs and adding them as start URLs with a maximum depth of 1.
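Before running those test crawls, the redirection file itself can be checked offline. The sketch below assumes the rules are available as a simple source-to-target mapping (real rule files often use patterns, which this does not handle). It collapses chains so that every old URL points directly at its final destination, and lists old URLs that have no rule at all. Function names are hypothetical.

```python
def flatten_redirects(rules):
    """Rewrite each rule so the source points straight at its final destination."""
    flattened = {}
    for source in rules:
        seen = {source}
        target = rules[source]
        # Follow the chain until the target is no longer itself redirected.
        while target in rules:
            if target in seen:
                raise ValueError(f"Redirect loop detected starting at {source}")
            seen.add(target)
            target = rules[target]
        flattened[source] = target
    return flattened

def missing_redirects(old_urls, rules):
    """Return the old URLs that are not covered by any redirection rule."""
    return sorted(url for url in old_urls if url not in rules)

# Hypothetical rules: an older migration sent /old-a to /mid-a, now moved again.
rules = {"/old-a": "/mid-a", "/mid-a": "/new-a", "/old-b": "/new-b"}
flat = flatten_redirects(rules)
uncovered = missing_redirects(["/old-a", "/old-c"], rules)
```

After flattening, `/old-a` redirects in a single hop, and `/old-c` is flagged as needing a rule before launch.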

For the particular case of an HTTPS migration (with or without a change of URL structure), make sure that all internal links have been updated (no internal links from an HTTPS page to an HTTP page). Oncrawl Custom Fields can be used to carry out this check.

Pro tip: Create shortcut pages to the pages you want to rank (hub pages pointing to product pages), improve loading times, increase the volume of content on pages, create sitemaps, and correct orphan pages and canonicals, reduce duplicate content…
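Independently of any crawler, the check for leftover HTTP links can be illustrated with the Python standard library. This sketch only looks at `<a href>` attributes on a single page and treats "internal" as "same hostname", both of which are simplifying assumptions. The class and function names are made up for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a document."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)

def insecure_internal_links(page_url, html):
    """Return absolute http:// links that point to the page's own hostname."""
    host = urlparse(page_url).hostname
    collector = LinkCollector()
    collector.feed(html)
    insecure = []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        parsed = urlparse(absolute)
        if parsed.scheme == "http" and parsed.hostname == host:
            insecure.append(absolute)
    return insecure

# Hypothetical page with one outdated internal link.
html = (
    '<a href="http://www.example.com/a">A</a>'
    '<a href="/b">B</a>'
    '<a href="https://www.example.com/c">C</a>'
    '<a href="http://other.example.org/">O</a>'
)
found = insecure_internal_links("https://www.example.com/", html)
```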

It is important to emphasize that high traffic pages need special attention, but in SEO every URL counts.


The acceptance testing (staging) phase

This essential phase of migration is the period during which you need to be on the lookout for any mistake in the source code or any misunderstanding of the requirements.

Protecting the development platform from search engines

Password protection with htpasswd is the recommended solution to keep search engines from crawling your staging URLs. This method also ensures that you don’t push a blocking robots.txt file or a meta robots “noindex” tag to production. It happens more often than it seems.

Pro tip: Your development site MUST be identical (iso) to the future production site, so that the code you validate is the code that goes live.

Check the quality of each code delivery

During this phase, you have to check that the site is moving in the right direction by regularly launching crawls on the staging platform. We recommend that you create a Crawl Over Crawl to compare two versions.

Don’t hesitate to check:

- Link Juice transfer and internal linking;

- The evolution of page depth;

- 4xx errors and links to these pages;

- 5xx errors and links to these pages;

- The quality of title, meta description and meta robots tags;

- The quality of source code and duplicate content elements.

Make sure that the code is W3C compliant (we often forget it, but it is one of Google’s first recommendations), that structured data does not contain errors, that the source code lives up to your ambitions and that the optimization rules are applied throughout the whole scope.
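To make the idea of comparing two crawls concrete, here is a minimal sketch that diffs two crawl exports. It assumes each crawl has been reduced to a dictionary keyed by URL with a `status` and a `depth`, a hypothetical structure, and it is in no way a reproduction of Oncrawl’s Crawl Over Crawl report.

```python
def compare_crawls(before, after):
    """Compare two crawls given as {url: {"status": int, "depth": int}} dictionaries."""
    report = {"new_errors": [], "deeper": [], "disappeared": [], "added": []}
    for url, old in before.items():
        new = after.get(url)
        if new is None:
            report["disappeared"].append(url)  # URL no longer found by the crawler
            continue
        if old["status"] < 400 <= new["status"]:
            report["new_errors"].append(url)  # was fine, now returns 4xx/5xx
        if new["depth"] > old["depth"]:
            report["deeper"].append(url)  # page moved further from the home page
    report["added"] = [url for url in after if url not in before]
    return report

# Hypothetical crawls of two successive staging deliveries.
crawl_v1 = {
    "/": {"status": 200, "depth": 0},
    "/shoes/": {"status": 200, "depth": 1},
    "/shoes/red": {"status": 200, "depth": 2},
}
crawl_v2 = {
    "/": {"status": 200, "depth": 0},
    "/shoes/": {"status": 500, "depth": 1},
    "/shoes/red": {"status": 200, "depth": 4},
    "/bags/": {"status": 200, "depth": 1},
}
diff = compare_crawls(crawl_v1, crawl_v2)
```

Running such a diff after each code delivery makes regressions visible on the day they are introduced.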

Pro tip: old redirection rules need to be updated to prevent redirections from pointing to new redirections.

It is necessary to check that all the old URLs return a 301 redirect to the new ones. Test crawls can be run by exporting the list of all old URLs and adding them as start URLs with a maximum depth of 1.

Sitemap.xml files

When the code is stable and all urls are discovered by the test crawler, you can export all the urls of the site. This allows you to build your sitemap.xml file (or sitemap_index.xml).

Add the sitemap addresses to your robots.txt and place the files at the root of the site (as recommended by the sitemap specification).

Pro tip: Creating one sitemap per major section of the site will allow you to track the indexing of each part of the site independently in Google Search Console.

Create a sitemap file with all your old redirected urls to force the engines to pass on each one.
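The per-section sitemaps mentioned above can be generated from the URL export with the standard library. In this sketch, a "section" is assumed to be the first path segment of the URL, which may not match your own silos, and the function names are illustrative. The namespace is the one defined by the sitemaps.org protocol.

```python
from collections import defaultdict
from urllib.parse import urlparse
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def group_by_section(urls):
    """Group URLs by their first path segment ('root' for the home page)."""
    sections = defaultdict(list)
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        sections[segments[0] if segments else "root"].append(url)
    return dict(sections)

def build_sitemap(urls):
    """Return a sitemap XML document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in sorted(urls):
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical export from the test crawl.
urls = [
    "https://www.example.com/shoes/red",
    "https://www.example.com/shoes/blue",
    "https://www.example.com/bags/tote",
    "https://www.example.com/",
]
sections = group_by_section(urls)
shoes_sitemap = build_sitemap(sections["shoes"])
```

The same `build_sitemap` function can be fed the list of old redirected URLs to produce the temporary sitemap described above.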

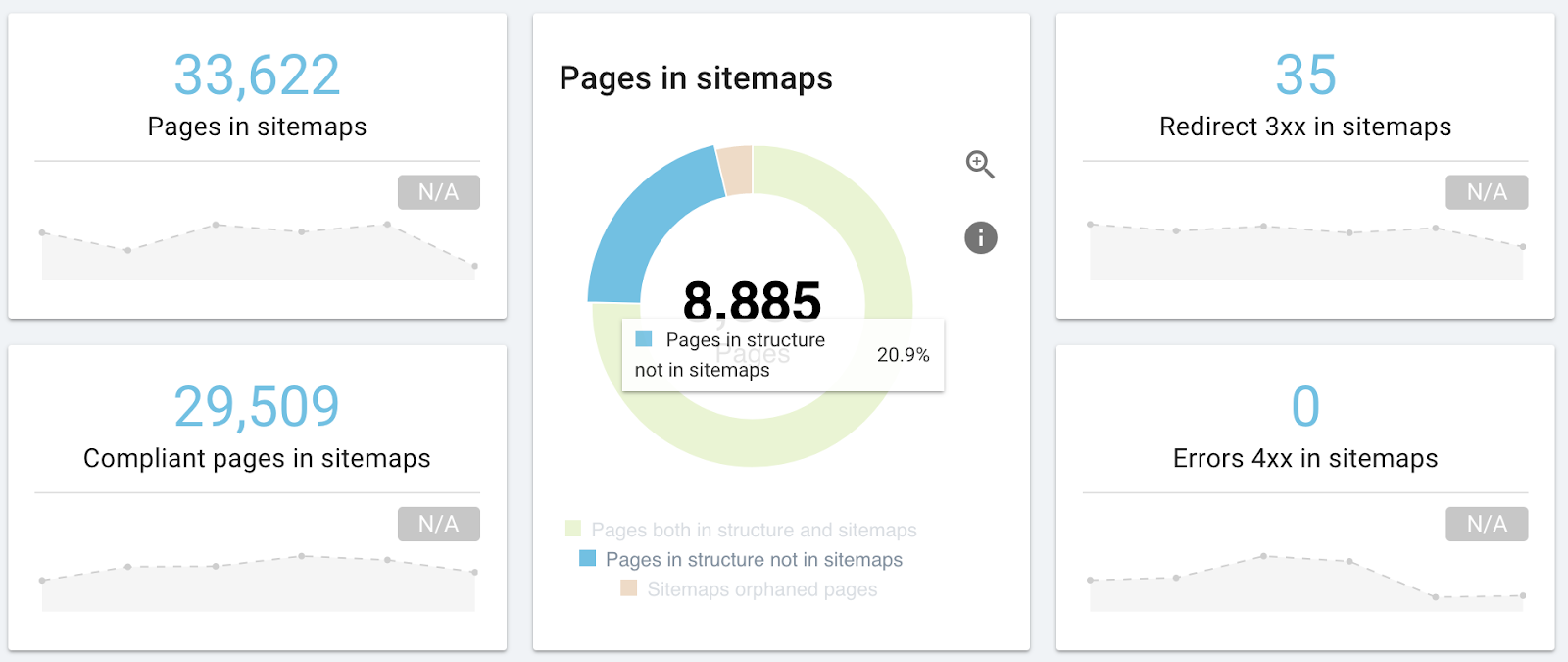

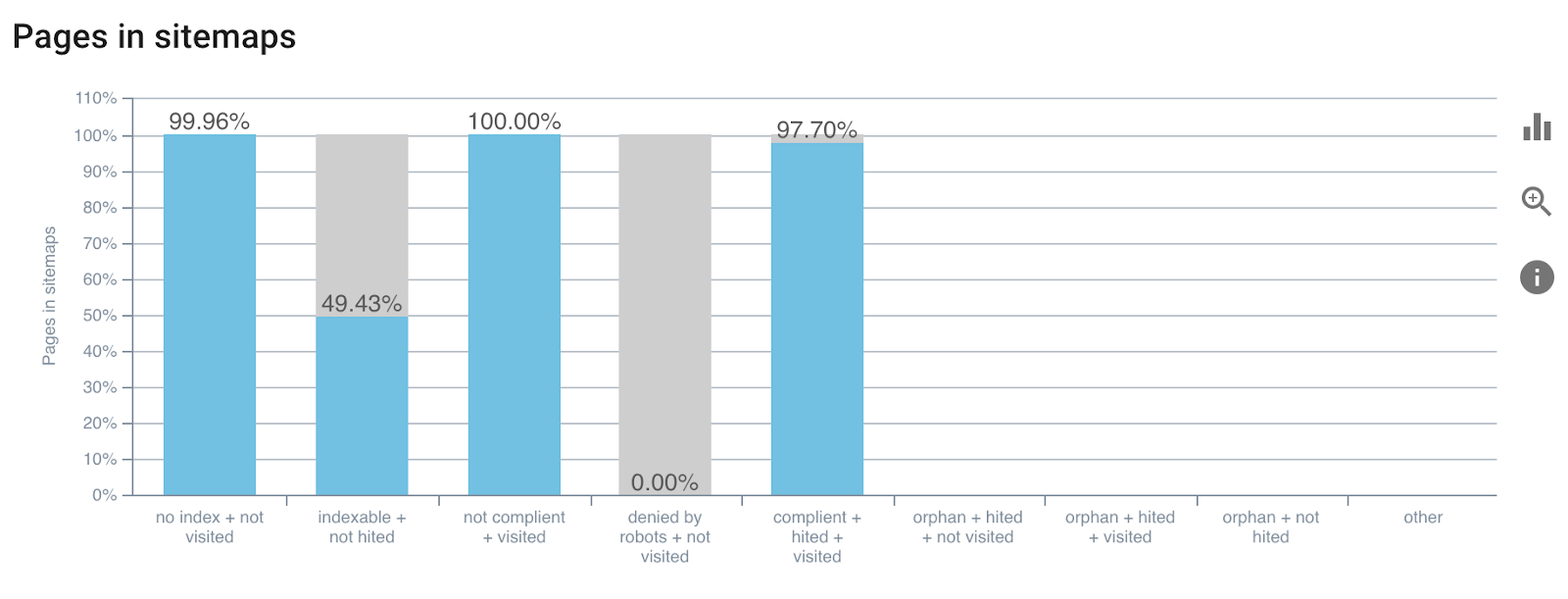

The latest version of Oncrawl will include a report dedicated to the analysis of sitemap.xml files. It will allow you to validate that you have not missed any URLs, and that your sitemaps do not point to pages whose canonical is different from the URL or to pages returning a 404.

Example of the sitemap analysis report


The day of transition to live version

The acceptance testing is finished and the code has been validated by all stakeholders, so you have a green light to push the new code live. SEO should not be impacted because the important pages have received special attention. However, you are also responsible for all the actions to be carried out on the Google side.

Here’s a list of your tasks as an SEO:

- Check robots.txt file;

- Check the code online;

- Check online sitemaps;

- Check redirections.

If everything is okay:

- Force the search engine crawl

- Check logs and status codes live
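The robots.txt check at the top of this list is easy to automate. The sketch below uses Python’s `urllib.robotparser` to list which key URLs a given robots.txt blocks. Note that this standard-library parser may not interpret every directive exactly as Google does (wildcard patterns in particular), so treat it as a first safety net rather than a definitive verdict. The function name and sample files are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content forbids for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

key_urls = [
    "https://www.example.com/",
    "https://www.example.com/shoes/",
    "https://www.example.com/cart/",
]

# A staging file that blocks everything: the classic accident on launch day.
staging_robots = "User-agent: *\nDisallow: /\n"
# The file you expect to see in production.
production_robots = "User-agent: *\nDisallow: /cart/\n"

blocked_by_staging = blocked_urls(staging_robots, key_urls)
blocked_in_production = blocked_urls(production_robots, key_urls)
```

If the live file blocks any page you expect to rank, stop and fix it before forcing a crawl.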

You can launch dedicated crawls for each step. The objective is to qualify the pages, so run crawls on tighter scopes to react faster.

Pro tip: define crawls with a maximum depth of 1 to test the reference pages for your changes, without testing the whole site.

Live follow-up

Following the launch, you must be able to compare SEO performance before and after, and ensure that the migration has had a positive impact or only a minimal negative one.

Once the new site is up and running, the impact of any changes will need to be monitored. Monitor rankings and indexation on a weekly basis as a first step. You will have to wait about a month before drawing conclusions, so you must be patient.

Check site performance in Webmaster Tools for anomalies after migration.

Follow the indexing rates and caching of your pages by search engines before checking rankings.

As we said in the introduction, the engines will take some time to adapt to your new version and the positions may be quite volatile during this period.

Running a Crawl Over Crawl comparison before and after migration is the most efficient way to compare your data.

Conclusions

Migration is an important and often beneficial time for SEO. Oncrawl will accompany you through these phases. From the initial crawl to the follow-up of validation metrics and the analysis of search engine reactions through logs, your operational work will be simplified.