Every practitioner should know that ranking well in today’s SERPs requires high-quality content that meets user intent and is useful and helpful in the moment. But what about technical SEO? If your site is not accessible to search engines, no one will see your great content, and that is where technical SEO comes in.

Technical SEO ensures that search engines can find, index and understand your content.

We live in a fast-paced world where more and more tasks are handled by computers, and technical SEO is no exception. But what do you gain from using digital tools for technical SEO tasks? Let’s explore by looking at the following:

- Speed and Revenue

- Content Discovery

- Traffic Losses

- Opportunities for Content Expansion

- Opportunities for Structured Data

Speed and Revenue

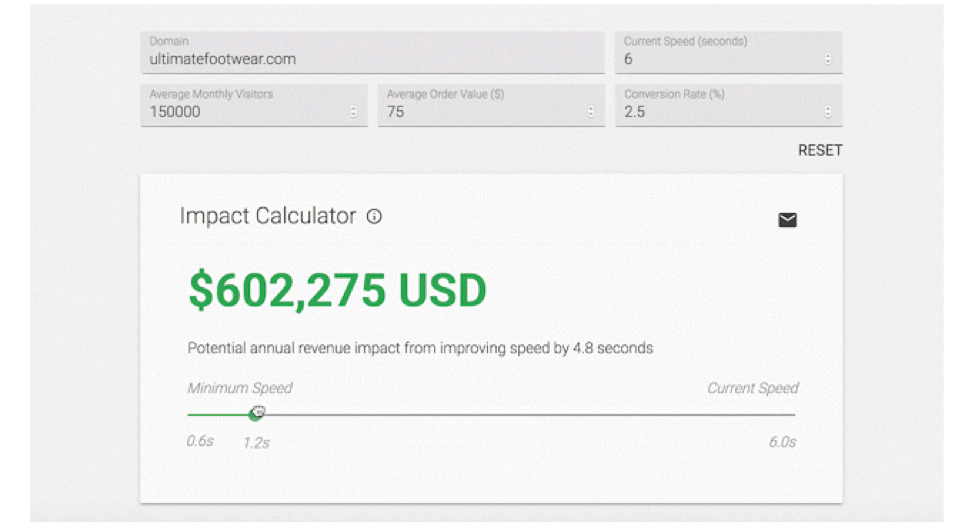

We all know that mobile experience is very important, and your pages should load in under 2 seconds. SEO crawlers give you the flexibility of entering a URL, crawling your entire website and determining which pages load fast, medium or slow. Now imagine that you’re an ecommerce company that converts well on the PPC side for “Health Insurance Quotes New York”, but that term has a CPC of $12.00, which makes holding the number one position very expensive. You should target that keyword on the SEO side, especially if you’re currently ranking at the bottom of page one and your page loads in six seconds. That long load time is causing you to leave a lot of money on the table, because fast-loading webpages can bring in more revenue.
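To make the fast, medium and slow buckets concrete, here is a minimal Python sketch of how a crawl export could be grouped by load time. The 2-second target comes from the paragraph above; the 4-second cutoff for "slow", the function names and the sample URLs are assumptions for illustration, not the behavior of any particular crawler.

```python
from typing import Dict, List

# Thresholds in seconds. The 2-second target comes from the article;
# the 4-second cutoff for "slow" is an assumption for illustration.
FAST_MAX_SECONDS = 2.0
MEDIUM_MAX_SECONDS = 4.0


def classify_load_time(seconds: float) -> str:
    """Return 'fast', 'medium' or 'slow' for a single page load time."""
    if seconds < FAST_MAX_SECONDS:
        return "fast"
    if seconds <= MEDIUM_MAX_SECONDS:
        return "medium"
    return "slow"


def bucket_pages(load_times: Dict[str, float]) -> Dict[str, List[str]]:
    """Group URLs by speed bucket, slowest first within each bucket."""
    buckets: Dict[str, List[str]] = {"fast": [], "medium": [], "slow": []}
    for url, seconds in sorted(load_times.items(), key=lambda kv: -kv[1]):
        buckets[classify_load_time(seconds)].append(url)
    return buckets


if __name__ == "__main__":
    # Hypothetical crawl export: URL -> measured load time in seconds.
    crawl = {
        "/quotes/new-york": 6.0,
        "/": 1.4,
        "/blog/what-is-a-deductible": 3.1,
    }
    print(bucket_pages(crawl))
```

Sorting the slow bucket against PPC spend or revenue per page is one way to decide which fixes to prioritize first.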


SEO tools like crawlers can keep crawling your site and give you insights into which pages are slow and which pages need help. Marry that with PPC information, rankings, revenue, and the opportunity to make more money, and you’ve got your business case right there.

Another great thing about relying on technical tools is that they allow you to see where the problems are. More and more websites are using JS frameworks and libraries such as Angular and React. SEO crawlers allow you to crawl JS sites to find out whether anything is hindering Google and whether you need dynamic rendering so that Google can crawl, index and rank those pages in search. Imagine if you had to do this manually, especially if you had an ecommerce site with thousands, if not millions, of pages. Believe me, that would not be fun.

Content Discovery

To keep up with your competitors, you must always think and act like a publisher. You must continue to create content that satisfies your users’ information needs and puts you in front of your target audience at the early stage, mid stage and purchase stage.

Google Search Console does a great job of letting you know which content is in Google’s index. If you’re creating new content, every page must be linked from another page so Google can find it. You can also submit new content using an automatically generated XML sitemap, which helps Google and other search engines find new pages dynamically.
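As a rough illustration of what "automatically generated" can mean, the sketch below builds a sitemap in the sitemaps.org format using only Python's standard library. The `build_sitemap` helper and the example URLs are hypothetical; in practice the list of pages would come from your CMS or crawler.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, str]]) -> str:
    """Build a sitemap XML string from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod uses a W3C date such as 2024-01-31.
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages; a real list would be pulled from your CMS.
    print(build_sitemap([
        ("https://example.com/", "2024-01-31"),
        ("https://example.com/new-page", "2024-02-01"),
    ]))
```

Regenerating this file whenever content is published, and referencing it in Search Console, keeps new pages discoverable even before internal links catch up.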

Traffic Losses

In more than 14 years of optimizing the sites of some of the world’s largest brands, I can’t tell you how many mistakes I have seen big corporations make. One mistake I consistently see is that when a company redesigns or replatforms its site, the noindex/nofollow from the staging environment is pushed into production. When someone sees a big dip in traffic, they start to panic and wonder what in the world is going on.

Instead, scheduling crawls of the production site with SEO-oriented technology is a good way to catch those errors. You can set up alerts to notify you if something gets added, changed or deleted, such as a noindex, a nofollow, or a robots.txt that disallows the entire site. This is a common problem that technical tools can easily solve, helping you manage your sites with peace of mind.
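The two checks described here, a stray noindex/nofollow and a robots.txt that blocks everything, can be sketched with Python's standard library. This is a simplified illustration rather than how any specific crawler works: the function names are my own, it only inspects meta tags (not the `X-Robots-Tag` HTTP header), and it expects the HTML and robots.txt text to have been fetched already.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def find_blocking_directives(html: str) -> set:
    """Return any of noindex, nofollow or none found in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives & {"noindex", "nofollow", "none"}


def robots_txt_blocks_site(robots_txt: str, user_agent: str = "*") -> bool:
    """Return True if robots.txt disallows the site root for user_agent."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return not rules.can_fetch(user_agent, "/")


if __name__ == "__main__":
    # Hypothetical production page that still carries staging settings.
    page = '<head><meta name="robots" content="noindex, nofollow"></head>'
    print(find_blocking_directives(page))
    print(robots_txt_blocks_site("User-agent: *\nDisallow: /"))
```

Run on a schedule against key production URLs, a non-empty result from either function is the kind of change you would want an alert for.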

Opportunities for Content Expansion

One of the features I like best about SEO technology is the ability to look for duplicate content, near duplicates and/or thin pages. We all know that search engines frown upon duplicate content. Finding out which pages are duplicates helps you solve this problem by blocking the pages, adding canonical tags or creating new content to make those pages unique.
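One common way to spot near duplicates, though not necessarily the method any given tool uses, is to break each page's text into overlapping word sequences ("shingles") and compare pages with Jaccard similarity. In the sketch below the shingle size of 3, the 0.8 threshold and the sample pages are all assumptions for illustration.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams ("shingles") in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common (0.0 to 1.0)."""
    if not a and not b:
        return 1.0  # two empty pages are treated as identical
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict, threshold: float = 0.8) -> list:
    """Return (url_a, url_b, score) for page pairs at or above threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    results = []
    for (url_a, set_a), (url_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            results.append((url_a, url_b, round(score, 2)))
    return results


if __name__ == "__main__":
    # Hypothetical page texts keyed by URL.
    pages = {
        "/ny-quotes": "cheap health insurance quotes for new york residents",
        "/ny-quotes?ref=ad": "cheap health insurance quotes for new york residents",
        "/claims": "how to file a claim after a car accident",
    }
    print(near_duplicates(pages))
```

Comparing every pair of pages gets expensive on large sites, which is one reason to leave this to a crawler built for the job.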

It’s also helpful to find thin pages, as well as pages that rank beyond the first three pages of results and don’t get any visits. Pages that have a limited amount of text probably won’t provide much value from an end user perspective. Content should be helpful and useful in the moment. If your content is helpful and useful and people engage with it, then you’re fine. If your content does not resonate with end users and the engagement rates are poor, then you might want to expand your content to cover different intents and help users find information that satisfies their needs. Having a crawler automate this process is a time saver and can help your site become visible for more keywords.
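The filter described above can be sketched in a few lines of Python. Everything specific here is an assumption for illustration: the `PageStats` fields, the 300-word cutoff for "thin", and the idea that page three ends at position 30 (ten results per page).

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class PageStats:
    url: str
    word_count: int
    visits: int
    position: float  # average ranking position; 1 is the top result


def expansion_candidates(
    pages: Iterable[PageStats],
    min_words: int = 300,      # assumed cutoff for a "thin" page
    max_position: float = 30,  # assumed end of results page three
) -> List[str]:
    """Return URLs that are thin, or that rank past page three with no visits."""
    flagged = []
    for page in pages:
        is_thin = page.word_count < min_words
        is_buried = page.visits == 0 and page.position > max_position
        if is_thin or is_buried:
            flagged.append(page.url)
    return flagged


if __name__ == "__main__":
    # Hypothetical data joined from a crawl, analytics and rank tracking.
    stats = [
        PageStats("/guide", word_count=1800, visits=420, position=4.2),
        PageStats("/faq", word_count=120, visits=35, position=12.0),
        PageStats("/old-post", word_count=900, visits=0, position=47.0),
    ]
    print(expansion_candidates(stats))
```

The output is only a shortlist; engagement data should still decide which of these pages are worth expanding.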

Opportunities for Structured Data

Giving search engines information in a way they can understand is a great way to improve clicks and impressions and make your content stand out. Using enterprise-level technology for technical SEO allows you to find those opportunities by showing whether you have structured data, whether you are missing it, and whether your markup contains any errors.
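As a simplified picture of those three checks (present, missing, broken), the Python sketch below looks for JSON-LD blocks in a page and tries to parse them. It assumes the markup is JSON-LD rather than Microdata or RDFa, it only catches syntax errors (not schema.org validation problems), it does not unpack `@graph` containers, and the names `JsonLdParser` and `audit_structured_data` are my own.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self.blocks.append("".join(self._buffer))
            self._in_json_ld = False


def audit_structured_data(html: str) -> dict:
    """Report whether JSON-LD exists, which @type values it has, and parse errors."""
    parser = JsonLdParser()
    parser.feed(html)
    parser.close()
    types, errors = [], []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append(str(exc))
            continue
        items = data if isinstance(data, list) else [data]
        for item in items:
            if isinstance(item, dict):
                types.append(item.get("@type"))
    return {
        "has_structured_data": bool(parser.blocks),
        "types": types,
        "errors": errors,
    }


if __name__ == "__main__":
    # Hypothetical product page markup.
    page = (
        '<script type="application/ld+json">'
        '{"@context": "https://schema.org", "@type": "Product", "name": "Plan A"}'
        "</script>"
    )
    print(audit_structured_data(page))
```

Pages that come back with no structured data at all are the opportunities; pages with entries under `errors` are the ones to fix first.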

Wrapping Up

Technical SEO tools have come a long way, and most SEO crawlers have reinvented themselves to look at your site not only from a technical perspective, but also in terms of content and links, and to integrate with analytics platforms and Google Search Console. This allows you to get a holistic view of your website’s performance and to uncover opportunities to improve your site, get more traffic and increase your sales and conversion rates.

Taking advantage of modern SEO technology can also save you time, help you with your SEO strategy, drive results and hit your clients’ key performance indicators. Don’t think of relying on tools instead of manual work as a bad thing; think of it as a good thing that can help you in the long run by letting you find crawl errors, content that is blocked, and much more.