Indexing is a critical step for SEO. It refers to the process in which search engines, like Google, store and organize the content found during the crawling process. Once a page is indexed, it’s in the running to be displayed as a result for a relevant query.

Unlike most other aspects of SEO, the indexing status of a page is binary: a URL is either in the index or it isn’t. Once your URL has been added to the index, the step is complete and you can move on to more refined aspects of your SEO strategy.

On the other hand, if you’re struggling to get indexed, that means there is no chance of showing up in Google results at all. You’re not even a part of the race to the top of the rankings. It stands to reason then that if this is your issue, it’s your most serious SEO problem.

The indexing status of a page can change over time; it is not guaranteed forever. However, a quality page with no technical issues or sudden changes is unlikely to drop out of the index on its own. In other words, the status is binary at any given moment, but it is not permanent.

You may be surprised to learn that international-level indexing is not really different from standard local-level indexing: Google’s systems neither favor international sites nor discriminate against them.

Let’s make sure you’re taking the right steps towards getting your international pages indexed.

Indexing vs. crawling

Before we get to the heart of the matter, I want to emphasize that crawling and indexing are distinct yet interconnected processes. Crawling is the discovery phase where search engine bots, known as crawlers or spiders, find new and updated content.

Indexing is the subsequent step where this content is analyzed and stored in a search engine’s database. Problems are often labelled as indexing issues when in fact they lie in crawling. To understand the intricacies of both processes, listen to the Oncrawl podcast episode about crawling and indexing or check out this e-book. In this piece, we will focus solely on the indexing part.

Importance of correct indexing in international SEO

It’s important to be aware that indexing issues on multilingual, international sites can become much more severe than on a one-language site, because a single issue often gets replicated across all language versions and is multiplied as a result. On top of that, international sites are typically more susceptible to indexing problems due to their complexity.

A multilingual CMS means extra tech stack and more URLs to handle, which leads to more risk of experiencing nuanced issues per language/country version. If on top of all that, the language versions aren’t fully translated or have unique pages per version that other languages don’t account for, it is really easy to have serious indexing issues, and what’s worse, have them go unnoticed for longer periods of time.
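One way to keep such issues from going unnoticed is to break a list of non-indexed URLs down by language/country version. The sketch below is only an illustration: it assumes each version lives in its own top-level subdirectory (for example `/es-mx/`), and the function name and URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def non_indexed_by_version(urls):
    """Count non-indexed URLs per language/country version.

    Assumption: each version lives in its own first-level directory,
    e.g. https://example.com/es-mx/... Sites using subdomains or ccTLDs
    would need to group by hostname instead.
    """
    counts = Counter()
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        counts[segments[0] if segments else "(root)"] += 1
    return dict(counts)


# Hypothetical export of non-indexed URLs.
report = non_indexed_by_version([
    "https://example.com/es-mx/producto-1",
    "https://example.com/es-mx/producto-2",
    "https://example.com/fr/produit-1",
])
```

A version whose count is far higher than the others is a good place to start troubleshooting.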

Common technical issues in indexing

There are a dozen reasons why a page might struggle to get indexed by Google. To simplify the explanations, we’ll examine the reasons in two categories: deliberate noindexing methods used incorrectly, and accidental indexing issues.

Wrong use of deliberate noindexing methods

The most common reason a page or a subset of pages hasn’t been indexed is the implementation of a solution that blocks indexing. While these methods exist to stop indexing on purpose, they often get implemented by mistake or due to a lack of understanding of how they work.

Meta robots noindex

The classic example is the use of meta robots noindex left on a page or even an entire site, by accident. Meta robots is a directive and not a hint, which means that once discovered by Googlebot, it will be obeyed with no exceptions.

Noindex can be part of the HTML code in the form of a meta tag, but there is another, lesser-known method of implementation: the X-Robots-Tag HTTP header. When troubleshooting noindex tags, make sure you check this implementation as well.
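To check both places at once, something along these lines can help. It is a minimal sketch using only Python’s standard library; the function and class names are made up for this example, and it deliberately ignores edge cases such as bot-specific header values (e.g. `googlebot: noindex`) or the `none` shorthand.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.extend(
                d.strip().lower() for d in attr.get("content", "").split(",")
            )


def find_noindex(html, headers):
    """Return where a noindex was found: 'meta', 'header', both, or nothing."""
    found = []
    parser = _RobotsMetaParser()
    parser.feed(html)
    if "noindex" in parser.directives:
        found.append("meta")
    # HTTP header names are case-insensitive, so normalise before the lookup.
    value = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    if "noindex" in [d.strip().lower() for d in value.split(",")]:
        found.append("header")
    return found
```

Feed it the HTML and response headers you already fetched for a URL; an empty list means neither implementation is present.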

Modern CMSs like WordPress let you add noindex tags to your HTML code in just one click, via popular SEO plugins like Yoast or All In One SEO, which makes it easy to activate a noindex tag by accident. And of course, books could be written about the noindex tags left behind by developers after a site migration.

Canonical tags

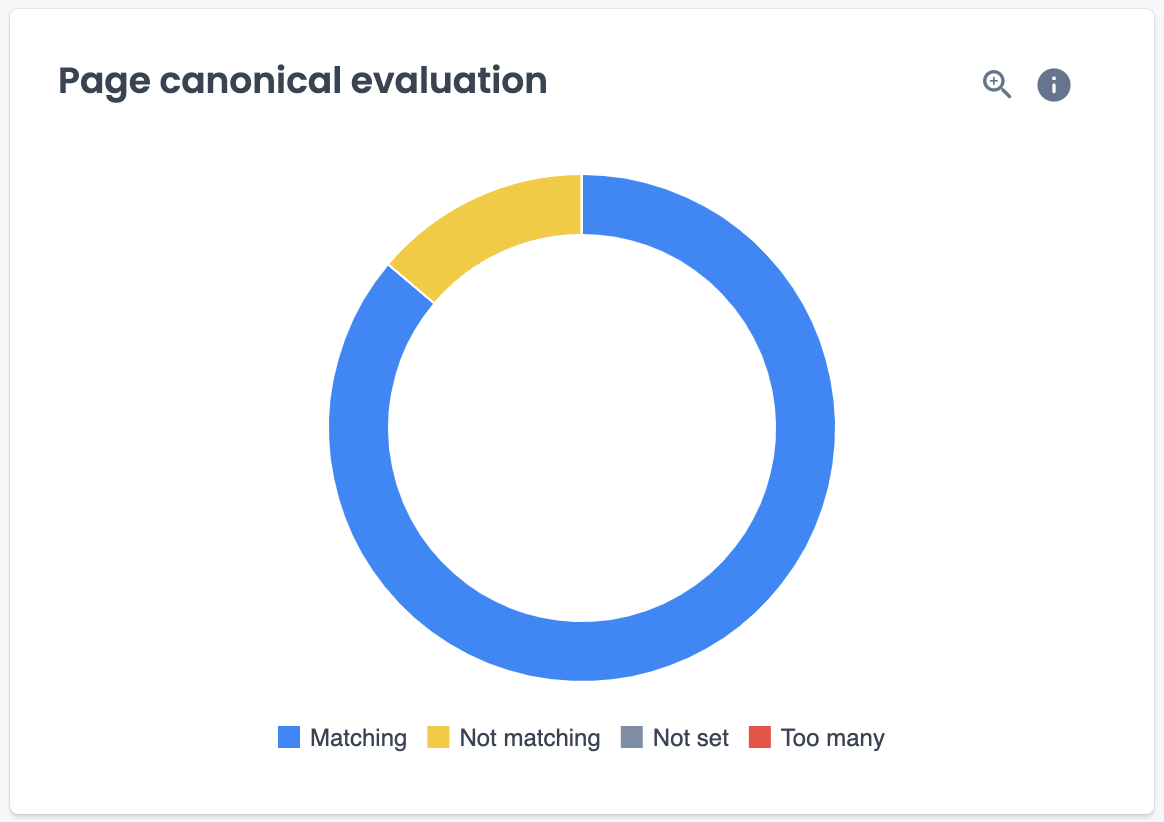

Canonical tags are only a hint for Googlebot and not a directive, however they can still mess up your site’s indexing if implemented incorrectly. This mistake is not as common, but for this reason it can be easily overlooked.

Source: Oncrawl

Be sure to verify if your canonicals are intact and make sure they don’t send conflicting signals. Additionally, when dealing with canonicals of an international site, remember that each language/country version is a canonical version, even if it’s been translated from another one.
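As a rough illustration of that check, the sketch below classifies a page’s canonical as self-referencing, pointing elsewhere, conflicting or missing. The names are hypothetical and the URL comparison is intentionally simplistic (it only ignores a trailing slash).

```python
from html.parser import HTMLParser


class _CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> on the page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        if "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", ""))


def canonical_status(page_url, html):
    """Classify the canonical signal found in a page's HTML."""
    parser = _CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "conflicting"  # more than one distinct canonical on the page
    if parser.canonicals[0].rstrip("/") == page_url.rstrip("/"):
        return "self-referencing"
    return "points elsewhere"
```

For a translated page such as a hypothetical `https://example.com/es-mx/`, the expected result is `"self-referencing"`; `"points elsewhere"` on a language version is worth a closer look.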

Accidental indexing issues as an aftermath of other problems

Indexing issues can also be a symptom of a technical SEO problem that isn’t directly related to indexing. Typically they come as an aftermath of a combination of issues, like the following.

3XX redirects

Excessive redirect chains and loops with incorrect response codes are a common issue, especially when a 302 temporary redirect has been used as one of the hops in the chain.

A temporary redirect discourages the bot from updating its index with the destination, so the new URL you are hoping to get indexed might not make it in. If a canonical tag conflict is also involved in the redirect chain, that’s one more element that could block indexing.

Make sure all redirects are intact and simplify all chains so that Google goes through them as smoothly as possible. Make sure 302s are being used only where a redirect is temporary and the index does not need to be updated.
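If you already have the hops of a redirect chain (from a crawler export or your own requests), a small audit like the one below can flag the problems described above. This is a sketch under assumptions: the input format and function name are invented for the example, and 307 is treated as temporary alongside 302.

```python
def audit_redirect_chain(hops):
    """Flag common problems in a redirect chain.

    hops: ordered list of (url, status_code) pairs, ending with the final
    response. This input shape is an assumption made for the example.
    """
    issues = []
    redirects = [(url, status) for url, status in hops if 300 <= status < 400]
    if len(redirects) > 1:
        issues.append(f"chain of {len(redirects)} redirects; collapse to one hop")
    for url, status in redirects:
        if status in (302, 307):  # temporary redirects
            issues.append(f"temporary redirect ({status}) at {url}")
    urls = [url for url, _ in hops]
    if len(urls) != len(set(urls)):
        issues.append("loop: a URL appears more than once")
    return issues


# Hypothetical chain: HTTP to HTTPS, then a temporary hop to the new URL.
chain = [
    ("http://example.com/a", 301),
    ("https://example.com/a", 302),
    ("https://example.com/b", 200),
]
issues = audit_redirect_chain(chain)
```

An empty list means a single permanent redirect (or none at all), which is the shape you want for URLs you expect to be indexed.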

Hreflang

Lack of hreflang in country versions of a page that share a language is the most nuanced reason behind indexing problems for international sites. This scenario applies to multi-country sites where either a language cascade is used to implement the same exact content for multiple markets/countries, or in other scenarios, the localized versions of the same language just aren’t different enough for Google to treat them as unique content.

Let’s take Spanish for Spain, Mexico and Chile as an example. Spanish is the official language in all three countries, and its written form differs very little between them. Google does not do a very good job of recognizing the small differences in vocabulary across those three Spanish-speaking markets.

Such close similarity, with no hreflang in place, most certainly results in some of the language versions not being indexed. Typically, the one page out of the three close to identical pages that gets indexed and ranked would be the one with the most backlinks or the highest PageRank. Hreflang is the ultimate solution for this specific issue.

Worth noting: if you are dealing with a multi-language setup instead of multi-country (for example, you have a site in Spanish, French and English, but no country specific versions), there should be absolutely no indexing issues for the above mentioned reason. Google distinguishes different languages well and won’t treat them as duplicates.
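For the Spain, Mexico and Chile example, the hreflang annotations could be generated along these lines. The helper name and URLs are hypothetical; the one firm rule the sketch relies on is that every version should carry the full set of tags, including the one pointing to itself.

```python
from html import escape


def hreflang_tags(versions, x_default=None):
    """Build <link rel="alternate"> tags for a set of equivalent pages.

    versions: mapping of hreflang code (e.g. "es-mx") to the absolute URL
    of that version. The same full set goes into the <head> of every version.
    """
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(versions.items())
    ]
    if x_default:
        lines.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
        )
    return "\n".join(lines)


tags = hreflang_tags({
    "es-es": "https://example.com/es-es/",
    "es-mx": "https://example.com/es-mx/",
    "es-cl": "https://example.com/es-cl/",
})
```

Generating the block from one mapping, rather than editing each template by hand, makes it harder for one country version to end up with a different set than the others.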

Orphan pages

When speaking of indexing issues, it’s not just a lack of indexing that can be problematic. Pieces of content can also be indexed when they shouldn’t be. That problem is related to orphan pages.

Many organizations have their website managed by a variety of teams that aren’t necessarily web managers by trade. Some site editors might remove internal links on a site, thinking they got rid of the entire piece of content, while the hidden page lives its own life, still indexed and ranked by Google.

If your indexing issues relate to over-indexing, check GSC reports or Oncrawl for URLs from outside of your crawl and you might be in for a surprise.

Source: Oncrawl
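The underlying idea is a simple set difference: URLs that search engines or your logs know about, minus URLs your crawler reached by following internal links. A minimal sketch, assuming you have both lists exported as plain URLs (the function name and sample data are hypothetical):

```python
def find_orphans(crawled_urls, known_urls):
    """Return URLs known from GSC exports or logs that the crawl never reached."""

    def normalise(url):
        # Only trims whitespace and a trailing slash; real data may need more.
        return url.strip().rstrip("/")

    crawled = {normalise(u) for u in crawled_urls}
    known = {normalise(u) for u in known_urls}
    return sorted(known - crawled)


crawled = ["https://example.com/", "https://example.com/es-mx/"]
known = ["https://example.com/es-mx", "https://example.com/old-campaign/"]
orphans = find_orphans(crawled, known)
```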

JavaScript rendering

A new wave of indexing issues at scale is related to JavaScript rendering. While JavaScript issues can cause serious indexing problems, they are not at all unique to the international SEO context. It’s a global issue that affects the entire domain, or parts of the site, regardless of the country/language setup.

Indexing beyond Google

When talking about international SEO, it makes sense to also address other search engines that operate internationally. Although Google is the go-to that we all think of and talk about when referring to SEO, it’s important to remember that a lot of their practices are developed for U.S. based sites.

Different search engines like Bing, Baidu, Naver or Brave Search have their own indexing nuances that must be considered for a truly global reach. Let’s look at those Google alternatives by the regions in which they operate.

Asia

The indexing for international SEO becomes significantly more complex when related to search engines from China and South Korea.

In China, the leading search engine is Baidu. In South Korea, users once turned to Naver, which used to dominate its home market; “once” here refers to the period before Samsung came to dominate South Korea.

When Android devices won over the smartphone market, so did Google as their default search engine. Naver, however, is still around with circa 41% market share, according to Statcounter data from August 2024.

Unusual indexing approach by Naver

Naver has not changed its rules about indexing from the very beginning: a site has to be submitted to Naver in order to be indexed. This is quite different from the approach other search engines have opted for.

Usually, the search engine bots proactively crawl the web to find indexable content. Naver is the only search engine that requires prior domain submission and verification.

Source: Naver

Indexing rules by Baidu

On the other hand, Baidu, the other Asian search engine from China, does proactively crawl the web but is more keen on indexing when the site has been officially verified in its webmaster tools.

While it might proactively discover, crawl, and index any content, due to its geographical restrictions – Baidu operates only in Mandarin Chinese and in China – the submission process is highly beneficial, especially for a newly launched or translated website.

Source: Baidu

If you run a large website, let’s say in English, and you decide to dive into analyzing your log files, you might discover a lot of activity from the Baidu bot, called Baiduspider.

Baidu does crawl the web outside of China, but it does not index the content at the same scale as Google does. It might be after your homepage and a couple of your most-linked pages, but not much more.

Also bear in mind that Baidu’s ability to index modern JavaScript frameworks is very limited, as per the latest available research by Dan Taylor from China Search News.

It’s also important to note that Baidu is unique when it comes to noindex directives. Interestingly, Baidu does not recognize “meta robots noindex” as a valid directive. The only meta robots values that follow Baidu’s standards are nofollow and noarchive. A noindex tag is simply ignored by Baidu’s bots.

To limit the indexing of a site in Baidu, webmasters need to use a robots.txt file. As indicated in Baidu’s technical documentation:

“If you prefer the search engine to index everything in your site, please do not create the robots.txt file.”

This is a radically different approach than the one taken by Google or other search engines.

Next time you find yourself joining a LinkedIn debate regarding robots.txt and indexing versus crawl blocking, which is a common SEO battle ground, remember that while robots.txt does not impact indexing in Google, it very much does in Baidu.
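Since robots.txt is the lever that matters for Baidu, it is worth testing what your file actually tells Baiduspider. The sketch below uses Python’s standard `urllib.robotparser`; the sample file and paths are hypothetical, and the parser models generic robots.txt matching rather than Baidu’s own implementation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep Baiduspider out of /internal/, allow everything else.
ROBOTS_TXT = """\
User-agent: Baiduspider
Disallow: /internal/

User-agent: *
Disallow:
"""


def allowed_for(robots_txt, user_agent, url):
    """Return True if the given user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


baidu_blocked = not allowed_for(ROBOTS_TXT, "Baiduspider", "https://example.com/internal/report")
google_allowed = allowed_for(ROBOTS_TXT, "Googlebot", "https://example.com/internal/report")
```

Here the same URL is off limits for Baiduspider but open to Googlebot, which is the kind of per-engine difference that is easy to miss when only Google is being tested.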

Yet another reason why international SEO is so unique and interesting.

Google’s global competitors

Indexing in Bing

Bing and Google seem to handle indexing similarly on the surface, but in fact there are some unique aspects of indexing by Bing that should be taken into account when optimizing for this search engine.

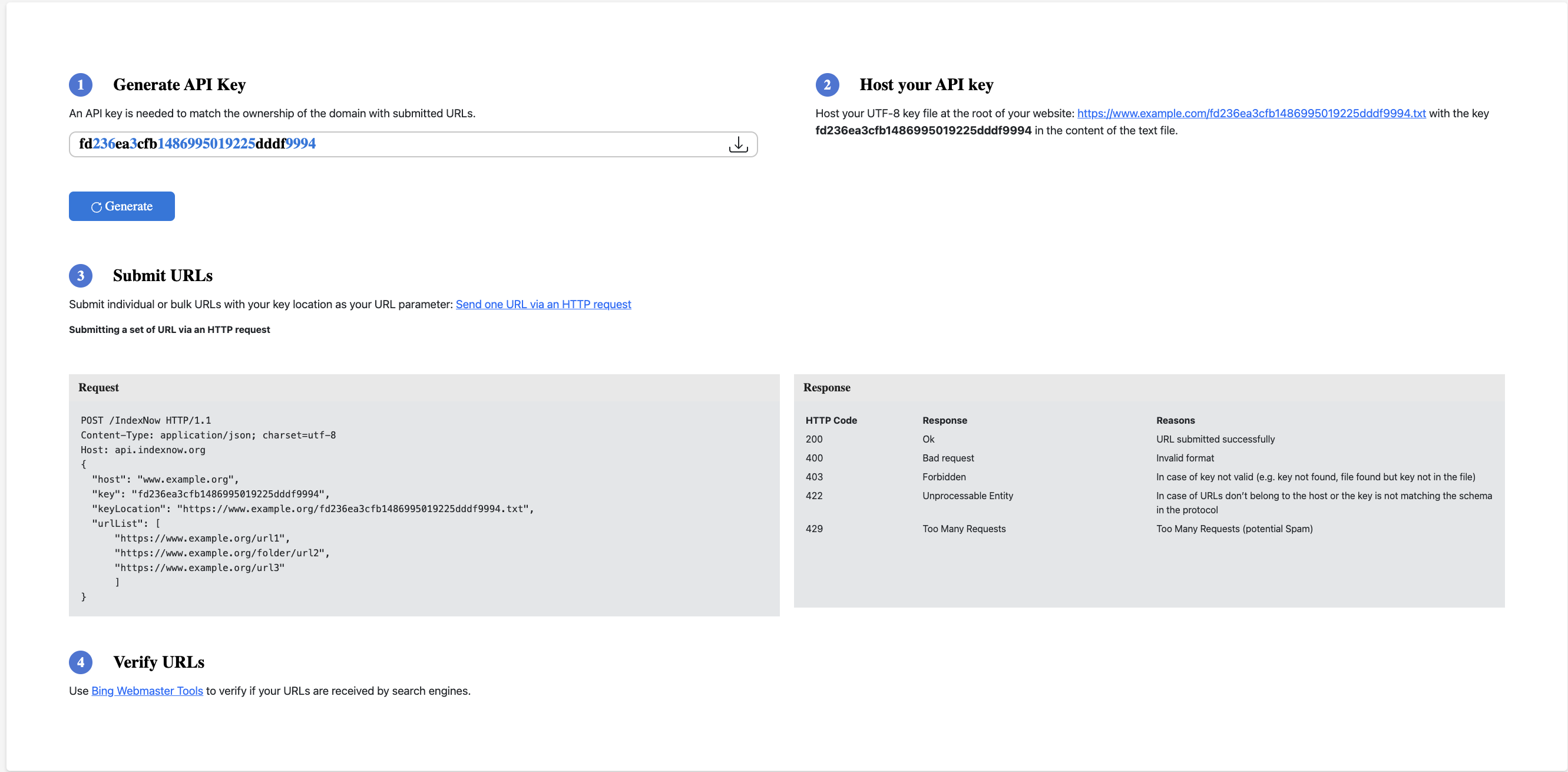

The first notable difference is the IndexNow functionality. IndexNow is an open-source protocol developed by Bing (and Yandex) that enables web publishers to notify search engines about changes to their web content.

This protocol allows faster indexing by sending a “ping” to the participating search engines whenever a URL is added, updated, or deleted. This push-based approach contrasts with the traditional pull-based model, where search engines periodically crawl sites to discover changes.

Source: Bing
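To give a feel for what such a “ping” looks like, here is a sketch that builds, but does not send, an IndexNow submission. The endpoint and the JSON field names (`host`, `key`, `keyLocation`, `urlList`) are written as I recall them from the public IndexNow documentation, so treat them as assumptions to verify there; the key and URLs are placeholders.

```python
import json
import urllib.request

# Assumed shared endpoint; participating engines also expose their own.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host, key, urls, key_location=None):
    """Prepare a POST request announcing added, updated or deleted URLs.

    The key must match a key file hosted on your site; key_location is only
    needed when that file is not at the default location.
    """
    payload = {"host": host, "key": key, "urlList": list(urls)}
    if key_location:
        payload["keyLocation"] = key_location
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    "example.com",
    "hypothetical-key",
    ["https://example.com/es-mx/nuevo-producto"],
)
# Sending is left out on purpose; urllib.request.urlopen(request) would submit it.
```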

IndexNow seems to improve indexing efficiency. It has not yet been adopted by Google, and while there were rumors of this functionality being tested by Google in 2021, we’ve not heard any news on the subject since.

In terms of indexing behavior, Bing tends to index new content faster, while Google discovers more content but indexes less of it. In other words, Google is pickier about what it indexes, while Bing may index more content with less selectivity. Additionally, Bing does not use mobile-first indexing, which can affect how sites are ranked and indexed on mobile devices.

The last major difference is related to the meta robots directives. Bing does not recognize the noimageindex directive, which Google does support. This difference can lead to variations in how content is indexed between the two search engines and leaves the webmasters in more control.

Search engines powered by Bing’s index

Bing is much more than a search engine, especially when looking at the subject of indexing. Bing, on top of being its own search engine, also powers several smaller and independent search engines from around the world.

The best-known ones powered by Bing’s index are DuckDuckGo, Ecosia, Qwant and Yahoo! Search (powered by Bing everywhere but Japan).

In practice, the fact that so many search engines operate on Bing’s index means that successful indexing in Bing is crucial for any website that wants to reach a global audience beyond Google.

While the small, independent search engines don’t offer webmaster tools (yet), indexing reports in Bing Webmasters are the place to go to analyze and troubleshoot indexing issues.

Ranking, however, will be unique to the individual search engines and powered by their own algorithm, mostly with no personalization in place, which will significantly impact the rankings as compared to Bing results.

Another roadblock with the indie, privacy-first search engines is that they may not show up in your GA4 or other analytics data, due to the fact that they are… private. This makes any data analysis close to impossible.

While the lack of sufficient data makes it hard to justify a big investment in the SEO strategies for those search alternatives, they should not be dismissed altogether.

Indexing in Brave Search

The only somewhat successful search engine that does not rely on Google or Bing, and has its own independent index, is Brave Search. Brave is a search engine developed by Brave Software, known for its focus on privacy and independence from big tech companies.

Source: Brave

This independence helps ensure that the search results are free from bias and censorship. Privacy is a key feature of Brave; it does not track users or collect personal data, ensuring a private browsing experience.

Additionally, Brave offers unique features like “Goggles,” which allows users to customize and filter search results according to their preferences, and “Discussions,” which highlights relevant forum conversations related to search queries.

Brave Search has encountered unique difficulties with indexing the web. As an unknown bot, it often gets blocked from crawling and indexing by robots.txt disallow rules aimed at all bots that aren’t explicitly listed. For this reason, the Brave bot gets around webmasters’ rules by pretending to be a known bot, e.g. Googlebot.

If your site does not seem to be indexed in Brave Search and does not come up even for branded searches, make sure your robots.txt isn’t blocking all unknown bots, including Brave.
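A quick way to test for that catch-all pattern is to ask how your robots.txt treats a bot name it does not list. The sketch below uses Python’s standard `urllib.robotparser`; the probe name is an arbitrary placeholder, not Brave’s actual user agent, and the sample file is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical allow-list style robots.txt: named bots in, everyone else out.
ALLOW_LIST_ROBOTS = """\
User-agent: Googlebot
Allow: /

User-agent: bingbot
Allow: /

User-agent: *
Disallow: /
"""


def blocks_unlisted_bots(robots_txt, probe_agent="hypothetical-unlisted-bot"):
    """Return True if a bot that is not named in the file cannot fetch the homepage."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(probe_agent, "/")


site_is_closed = blocks_unlisted_bots(ALLOW_LIST_ROBOTS)
```

If this returns `True` for your own file, any crawler you did not name explicitly is being turned away at the door.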

Brave, similarly to DuckDuckGo and Ecosia, won’t show up in your GA4 or other analytics systems. This search engine is only starting to gain traction and it’s known especially among the tech-savvy and privacy-concerned users, so if your website targets this niche, being indexed in Brave should be important.

Best practices for addressing indexability issues

Strategies for troubleshooting international indexing issues

The best free tools for troubleshooting indexing issues are Google Search Console and Bing Webmasters. Both tools are a fantastic resource for any webmaster, advanced or not.

Other search engines with a webmaster equivalent, that were mentioned in this article, are Baidu, Naver and Yandex.

And let’s remember what we addressed earlier in the article: even though indie search engines such as Ecosia, DuckDuckGo and Qwant don’t offer access to webmaster tools per se, they do rely on Bing for their index. Bing Webmasters is therefore the go-to tool for analyzing your indexing issues across all of them.

A pro tip related to Bing Webmasters: if you have not yet verified your site there, you can do so within minutes and with no special access to the domain. As long as you log in with a Google account that has verified GSC access, you can import those same verified domains to Bing in a matter of three clicks.

Source: Bing Webmasters Tool

This is a fantastic and simple process that takes into account your existing accesses and straight away replicates them onto Bing. Remember, the GSC/Bing Webmasters data is being tracked from the verification moment onwards, so if you haven’t already, then go ahead and verify that access now. You will thank me later, when you need it next!

Tools for monitoring the progress of international indexing

On top of the GSC/Webmaster Tools, which give you a lot of useful information on indexing, it is important to track the improvements over a longer period of time than the official tools provide, and also, to cross-check the indexing status with other useful info like crawl data, or URL-level click data.

This is possible with the help of tools like Oncrawl, which allows you to integrate external data, through connectors, for comprehensive data analysis.

If you are looking for an index-specific tool with more advanced features at scale, there is ZipTie (ZipTie.dev), which integrates with Google Search Console. It helps identify which pages are not indexed and monitors indexing status over time, alerting you if any pages get deindexed, for example due to a site change or during Google’s Core Updates.
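Whatever tool you use, the monitoring logic boils down to comparing indexing snapshots taken at different dates. The sketch below illustrates that idea only; it is not how any of the tools above work internally, and the data structure and names are assumptions made for the example.

```python
def indexing_changes(previous, current):
    """Compare two indexing snapshots.

    previous / current: mapping of URL -> True if the URL was indexed when the
    snapshot was taken. URLs missing from a snapshot count as not indexed.
    """
    deindexed = sorted(
        url for url, was_indexed in previous.items()
        if was_indexed and not current.get(url, False)
    )
    newly_indexed = sorted(
        url for url, is_indexed in current.items()
        if is_indexed and not previous.get(url, False)
    )
    return {"deindexed": deindexed, "newly_indexed": newly_indexed}


# Hypothetical snapshots from two consecutive exports.
last_month = {"https://example.com/es-es/": True, "https://example.com/es-mx/": True}
this_month = {
    "https://example.com/es-es/": True,
    "https://example.com/es-mx/": False,
    "https://example.com/es-cl/": True,
}
changes = indexing_changes(last_month, this_month)
```

Storing the snapshots yourself also gets around the limited history that the official tools keep.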

Wrapping up

In conclusion, understanding the details of indexing is crucial for a successful international SEO website, as being indexed is the very first step. The process ensures that your website’s content is discoverable and accessible to users globally.

While indexing issues can be caused by technical errors like incorrect use of meta tags or complex site structures, they can be mitigated thanks to careful planning and the use of tools like Google Search Console and Bing Webmasters.

Moreover, recognizing the unique indexing requirements of different search engines, such as Baidu or Naver, is essential for showing up in those search engines, if they are what your target audience is using.

Additionally, the importance of Bing Webmasters as the gateway tool for indexing in DuckDuckGo, Ecosia and Qwant cannot be overlooked as this is the only source of indexing knowledge in those platforms.

Indexing is something that simply cannot be overlooked or considered a given when it comes to successfully implementing your international SEO strategy.